Critical infrastructure is essential to the United States’ economic and national security, global competitiveness, and overall quality of life. Sixteen sectors make up critical infrastructure, ranging from manufacturing to energy and defense.

These built structures and systems keep our world running. When they are inefficient or fail, our communities face negative consequences, including public health and safety issues and environmental catastrophes.

It is essential to ensure the reliability and security of these structures through data-driven industrial maintenance methods.

Growing Concerns for the Built Environment

Despite the high stakes of infrastructure failure, minimal data exists on the health of the built environment.

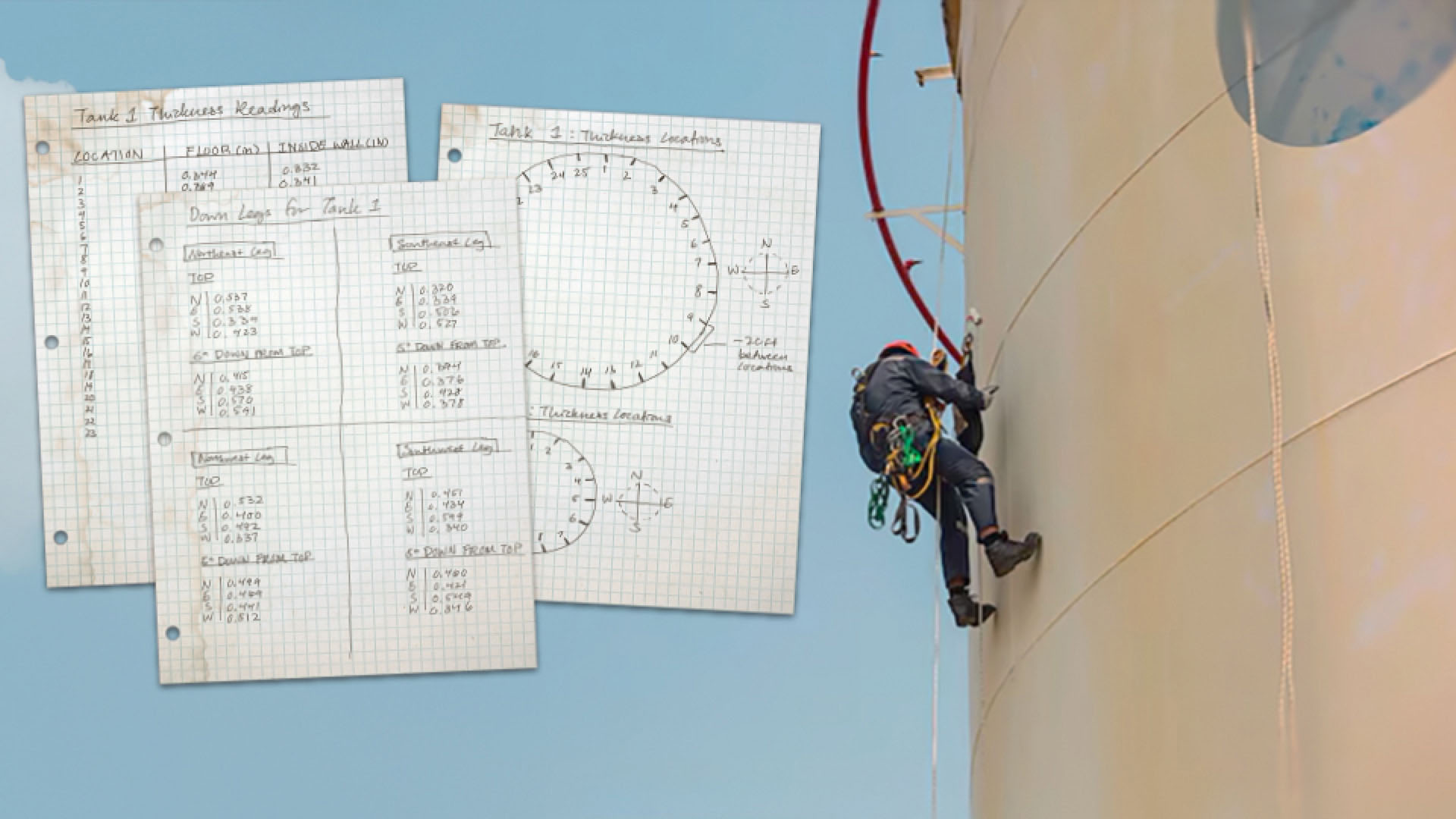

Imagine making life-threatening maintenance and repair decisions based on handwritten drawings or spreadsheets. Or worse, imagine having to react and respond to a failure. This is the reality for those who operate and maintain today’s critical infrastructure.

As a result, our nation’s critical infrastructure is unreliable; the physical assets that people depend on every day could become inoperable at any moment.

According to the 2025 Infrastructure Report Card released by the American Society of Civil Engineers (ASCE), America’s infrastructure received an overall grade of C, the same grade the U.S. received in 1988.

While numerous sectors are on the cutting edge of innovation and technological advancement, it is clear that some of our most critical infrastructure sectors have been left behind.

For example, U.S. electric demand is forecasted to increase significantly over the next four years. However, nearly two-thirds of our nation’s power grid is more than 25 years old. It is no coincidence that power disruptions are on the rise as we rely more and more on rapidly aging infrastructure.

If our current infrastructure is crumbling, why not build new infrastructure? We simply do not have the time or resources to start from scratch.

Our best option is to extend the lifespan of existing infrastructure. To do so, we must modernize our infrastructure maintenance and repair methods by prioritizing high-quality data.

Antiquated Industrial Maintenance Methods

Traditional methods for critical infrastructure maintenance are outdated and insufficient.

At Gecko Robotics, we refer to this conventional maintenance method as “Joe on a rope”: one person on a scaffold, collecting infrastructure data by hand.

The process is inefficient and unsafe. Moreover, manual data collection does not provide complete coverage of fixed assets, such as bridges or boilers, leaving asset health conditions unknown.

Without complete asset coverage, resources and capital are misallocated. There is not enough information to make accurate, data-driven maintenance and repair decisions.

Add a skilled labor shortage, extreme weather, and fiscal uncertainty, and the future of America’s critical infrastructure looks rocky. The problems we face today are a microcosm of the problems we will face in the future.

To ensure our world as we know it keeps running, we need to prioritize greater investment and innovation for the critical infrastructure sectors that make up our built world – empowering our workers and protecting our communities and nation at large.

By investing in new technologies that aim to modernize critical infrastructure maintenance methods, we have a window to save our built world from crumbling.

Fixed Asset Challenges in the Built Environment

Many factors pose challenges to capturing high-quality data on our critical infrastructure. Our built world is complex. Every fixed asset is unique with its own specs, requirements, and standard operating procedures.

External factors such as material composition, atmospherics and angles, obstructions, heights, and complex geometries create even greater disparity. These uncontrollable and unpredictable real-world variables are difficult to replicate in a controlled simulation for research and technology development.

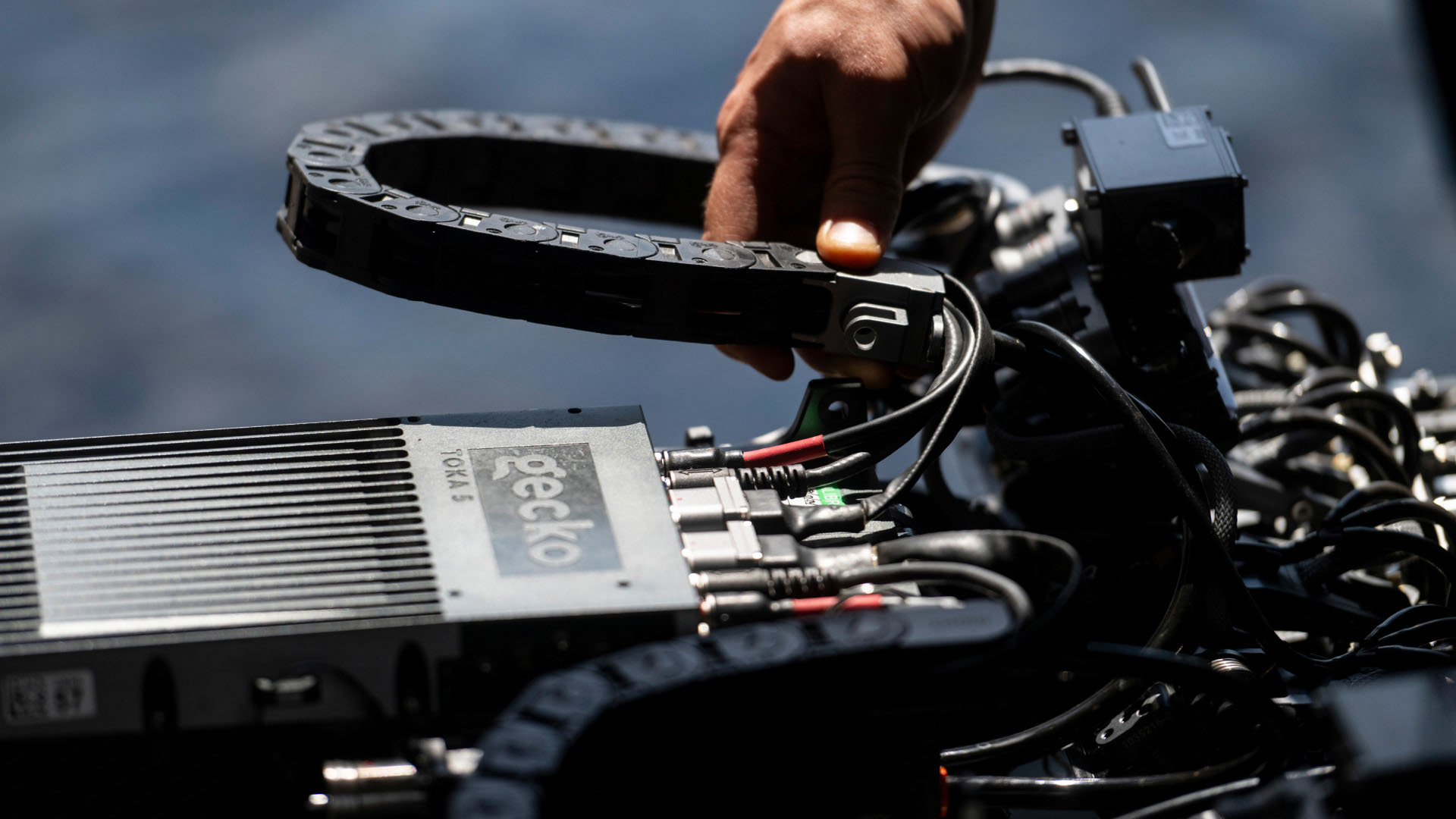

Take autonomous robotic systems, for instance. This hardware needs to perform actions reliably outside of the lab. Even if a lab simulation accurately represents the built environment, there is still high potential for unanticipated disruptions.

Consequently, creating, testing, and iterating on a robotic system until it reliably performs a desired action is time-consuming. Developing a robotic system that collects asset health data in the built environment is an order of magnitude harder still.

Even after the robotic system reliably performs an action, there are two key challenges that need to be addressed to ensure that the data collected is high quality: localization and validation.

Localization enables a robot to determine its position and orientation within its environment. For example, self-driving cars typically combine GPS with sensors such as LiDAR, matching what they sense against known maps of the world.

In the context of Gecko Robotics, localization enables a robot to pinpoint where asset damage is located. Industrial settings often lack consistent access to GPS signals, so localization there requires novel problem-solving.
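The article does not describe how Gecko’s robots localize without GPS. One textbook starting point for GPS-denied localization is dead reckoning: integrating wheel-encoder distance and a heading estimate into a position track, then tagging each sensor reading with that position so damage can be located later. The sketch below assumes a crawler moving over a surface that can be "unrolled" into flat x/y coordinates (such as a tank wall); `TICKS_PER_METER`, `Pose`, `dead_reckon`, and `tag_reading` are hypothetical names, and this illustrates the general technique, not Gecko’s method.

```python
import math
from dataclasses import dataclass

# Hypothetical encoder resolution; a real crawler would use its own calibration.
TICKS_PER_METER = 1000.0


@dataclass
class Pose:
    x: float = 0.0        # meters along the first unrolled surface axis
    y: float = 0.0        # meters along the second unrolled surface axis
    heading: float = 0.0  # radians, 0 = +x direction


def dead_reckon(start: Pose, steps: list[tuple[int, float]]) -> list[Pose]:
    """Integrate (encoder_ticks, heading_rad) samples into a pose track."""
    track = [start]
    pose = start
    for ticks, heading in steps:
        distance = ticks / TICKS_PER_METER
        # Advance along the measured heading by the distance the wheels report.
        pose = Pose(
            x=pose.x + distance * math.cos(heading),
            y=pose.y + distance * math.sin(heading),
            heading=heading,
        )
        track.append(pose)
    return track


def tag_reading(pose: Pose, thickness_mm: float) -> dict:
    """Attach the estimated position to a sensor reading."""
    return {"x_m": round(pose.x, 3), "y_m": round(pose.y, 3), "thickness_mm": thickness_mm}


if __name__ == "__main__":
    # Drive 1 m along +x, then turn and drive 0.25 m along +y.
    samples = [(500, 0.0), (500, 0.0), (250, math.pi / 2)]
    track = dead_reckon(Pose(), samples)
    print(tag_reading(track[-1], 9.4))
```

Dead reckoning accumulates drift with every step, so practical systems typically correct it against known reference points or additional sensors; the sketch leaves that correction out.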

On top of that, the built world consists of a wide variety of operational domains, from shipyards and submarines to power plants and steel mills. Localization solutions for the built environment are not one size fits all.

At Gecko Robotics, we work boots-on-the-ground to advance our suite of robotic technologies to collect full-coverage, high-quality health data on the built world. With successful localization, robots that climb, crawl, swim, and fly generate data layers that meet five key criteria for data quality: accuracy, completeness, precision, reliability, and timeliness.
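The five criteria are named here but not defined. As a hedged illustration, the sketch below scores three of them in simple, assumed ways: completeness as the fraction of a hypothetical inspection grid with at least one reading, precision as the spread of repeated readings at one spot, and timeliness as whether the latest inspection falls within an assumed maximum age. The grid layout, the function names, and the 365-day threshold are all assumptions, not Gecko’s definitions.

```python
from datetime import date
from statistics import stdev


def completeness(covered_cells: set[tuple[int, int]], rows: int, cols: int) -> float:
    """Fraction of a rows x cols inspection grid that has at least one reading."""
    in_bounds = {(r, c) for r, c in covered_cells if 0 <= r < rows and 0 <= c < cols}
    return len(in_bounds) / (rows * cols)


def precision_mm(repeat_readings: list[float]) -> float:
    """Sample standard deviation of repeated readings at one spot (lower = more precise)."""
    return stdev(repeat_readings)


def is_timely(last_inspected: date, today: date, max_age_days: int = 365) -> bool:
    """True if the most recent inspection is no older than max_age_days (assumed threshold)."""
    return (today - last_inspected).days <= max_age_days


if __name__ == "__main__":
    # Hypothetical 10 x 10 grid where a robot covered the first 8 columns of every row.
    covered = {(r, c) for r in range(10) for c in range(8)}
    print(completeness(covered, 10, 10))  # 0.8
    print(precision_mm([9.80, 9.82, 9.79]))
    print(is_timely(date(2024, 1, 15), date(2025, 1, 10)))  # True
```

A handful of manual spot checks on the same grid would score far lower on completeness, which is the gap the article attributes to "Joe on a rope."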

However, even robotically generated data layers require cleanup, or data validation, before they are useful.

Traditionally, data validation required human intervention. Today, there is enough data to train artificial intelligence (AI) algorithms to automatically and verifiably scrub robotically collected data sets for errors and interference. Moreover, machine learning (ML) models can identify and classify patterns, for example to characterize damage.
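The AI and ML models described above are not shown in the article. As a much simpler, plainly swapped-in stand-in, the sketch below flags suspect readings with a classical robust statistic: the modified z-score based on the median absolute deviation (MAD), using Iglewicz and Hoaglin’s commonly cited 3.5 cutoff. It shows what scrubbing for errors and interference means in practice: a sensor dropout reading 0.0 mm and an interference spike get flagged, while normal variation passes. `flag_outliers` and the sample values are hypothetical.

```python
from statistics import median


def flag_outliers(readings: list[float], threshold: float = 3.5) -> list[bool]:
    """Flag readings whose modified z-score exceeds the threshold."""
    med = median(readings)
    mad = median(abs(x - med) for x in readings)
    if mad == 0:
        # More than half the readings are identical; anything else is infinitely far out.
        return [x != med for x in readings]
    # 0.6745 scales the MAD so the score is comparable to a standard z-score.
    return [abs(0.6745 * (x - med) / mad) > threshold for x in readings]


if __name__ == "__main__":
    # Hypothetical wall-thickness readings (mm) with a dropout (0.0) and a spike (25.0).
    readings = [9.8, 9.7, 9.9, 9.8, 0.0, 9.6, 25.0]
    flags = flag_outliers(readings)
    kept = [x for x, bad in zip(readings, flags) if not bad]
    print(flags)
    print(kept)
```

The median-based statistic is used instead of mean and standard deviation because a single large spike would inflate the standard deviation and could hide itself.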

Together, robotic localization and data validation produce “clean” data sets that can be organized into one common language for the built world. At Gecko, we call this the ontology.

The ontology is a centralized system for all data on the health of the built world. It serves as the foundation for software engineering and enables real-world outcomes in critical infrastructure sectors.
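The ontology’s actual structure is not described here. As a loose, hypothetical illustration of "one common language," the sketch below defines a single `Measurement` shape that every data source (crawler, drone, manual inspection) writes into, keyed to a shared `Asset` record, so queries behave the same regardless of where the data came from. `Asset`, `Measurement`, `AssetHealthStore`, and all field names are assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Asset:
    asset_id: str
    asset_type: str  # e.g. "storage_tank", "boiler"
    site: str


@dataclass(frozen=True)
class Measurement:
    asset_id: str
    metric: str      # e.g. "wall_thickness"
    value: float
    unit: str        # e.g. "mm"
    x_m: float       # localized position on the asset
    y_m: float
    collected_on: date
    source: str      # e.g. "crawler", "drone", "manual"


class AssetHealthStore:
    """Hypothetical central store: every source writes the same Measurement shape."""

    def __init__(self) -> None:
        self.assets: dict[str, Asset] = {}
        self.measurements: dict[str, list[Measurement]] = defaultdict(list)

    def add_asset(self, asset: Asset) -> None:
        self.assets[asset.asset_id] = asset

    def add_measurement(self, m: Measurement) -> None:
        # Reject data that cannot be tied to a known asset.
        if m.asset_id not in self.assets:
            raise KeyError(f"unknown asset {m.asset_id!r}")
        self.measurements[m.asset_id].append(m)

    def min_value(self, asset_id: str, metric: str) -> Optional[float]:
        """Smallest recorded value of a metric across all sources, or None if absent."""
        values = [m.value for m in self.measurements.get(asset_id, []) if m.metric == metric]
        return min(values) if values else None


if __name__ == "__main__":
    store = AssetHealthStore()
    store.add_asset(Asset("T-1", "storage_tank", "Site A"))
    store.add_measurement(Measurement("T-1", "wall_thickness", 9.4, "mm", 1.0, 0.25, date(2025, 1, 10), "crawler"))
    store.add_measurement(Measurement("T-1", "wall_thickness", 8.9, "mm", 2.0, 0.5, date(2025, 1, 11), "drone"))
    print(store.min_value("T-1", "wall_thickness"))
```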

Innovating Industrial Technology and Data Quality Tools

AI is only as good as the data that trains it. Robotically collected data sets help ensure the high data quality that AI-powered software solutions depend on.

In the built world, poor decisions carry severe consequences. The importance of data quality for modern solutions cannot be overstated. That is why AI and robotics must be implemented together to enable data-driven decision-making for critical infrastructure protection.

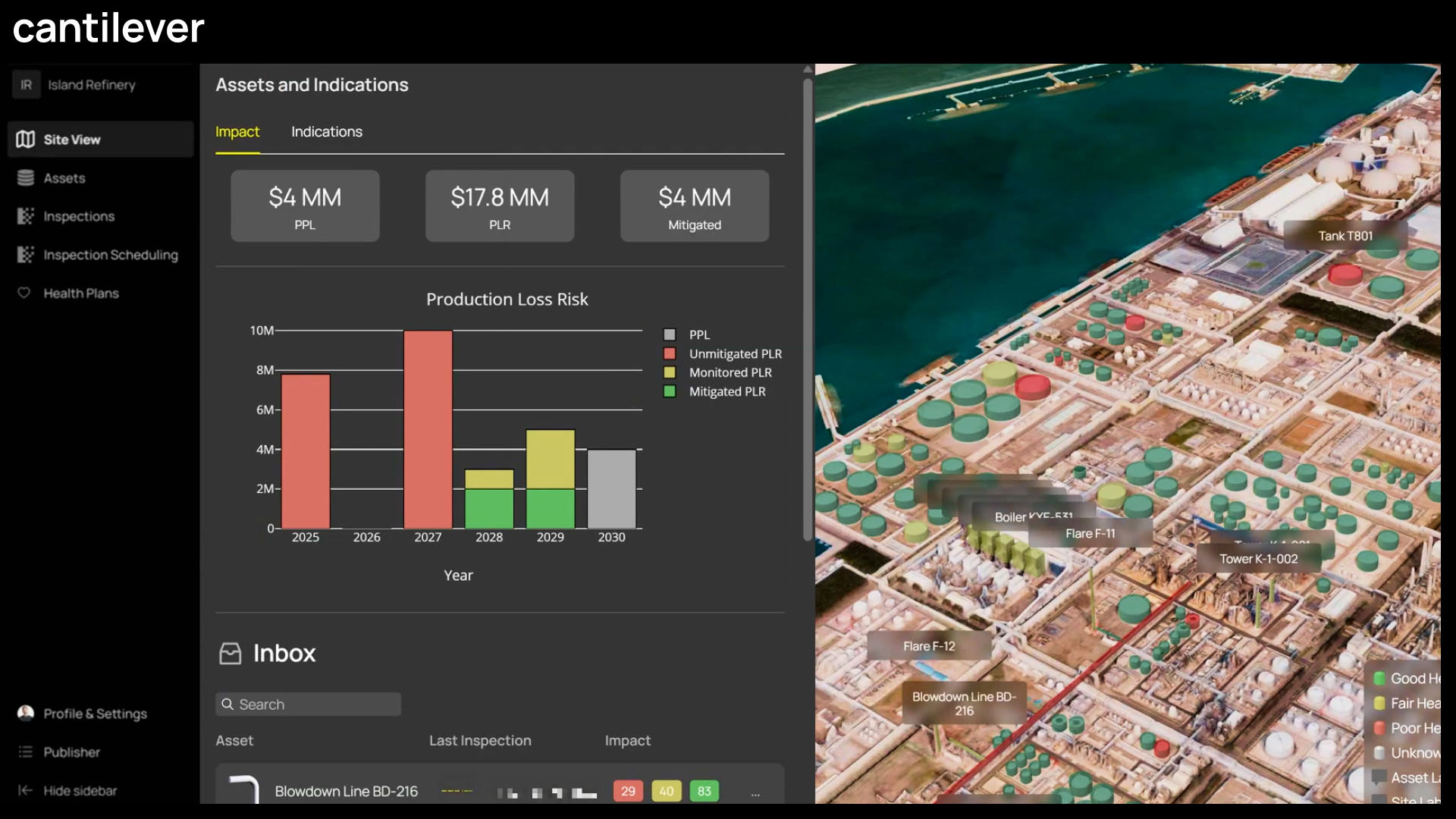

At Gecko, we work boots-on-the-ground to build technology that drives real-world outcomes in critical infrastructure sectors. Our Cantilever operating platform combines AI and robotics to generate critical workflows for the built world.

For example, a Fortune 500 mining company lacked data on its fleet of storage tanks. The tanks were operating decades beyond their expected useful life, which led to costly, inefficient reactive maintenance and unexpected downtime. It also put the company at risk of asset failures and their consequences, such as production loss, safety hazards, and environmental damage.

Rather than replacing the storage tanks entirely or continuing to make reactive repairs, the mining company deployed AI and robotics. The resulting high-quality asset health data allowed it to pinpoint damage and predict how the assets would age over time.

The data revealed that a simple process change to the fill height could extend the storage tanks’ useful life by ten years with no additional investment or maintenance.

Not only did this insight save the company from overspending on maintenance, but it also helped ensure the safety of the surrounding communities and environment.

This is the power of high-quality data for the built world. By using AI and robotics, maintenance and operations teams can optimize capital planning and reduce reactive maintenance events.

At scale, this approach can give us full visibility into the health of America’s critical infrastructure, today and into the future.

Modernizing Asset Lifecycle Management with AI and Robotics

America’s critical infrastructure is rapidly aging, and today’s asset lifecycle management methods cannot keep up. Without high-quality data and innovative technologies like AI and robotics, our communities, environment, and nation at large remain vulnerable to failures.

The physical assets within our built environment are highly complex. Traditional maintenance methods do not have the capacity to collect and utilize high-fidelity data. We must advance and implement AI and robotics to optimize maintenance, capital planning, and operations across critical infrastructure industries. Critical infrastructure sectors need innovation and new technologies to ensure their security and resilience.

By leveraging high-quality health data, we can ensure our critical infrastructure stands strong for future generations. We must use AI and robotics to drive confident decision-making at scale across critical infrastructure sectors.